Show this engraving to a nearby tame adult, and he or she will probably know that this is “The Lady with the Lamp”, Florence Nightingale, who turned nursing into a profession. Tame adults are unlikely to know that the engraving was by James-Charles Armytage, and they are even more unlikely to know what Florence Nightingale did for statistics, using them to argue for reforms in nursing.

To rally public support for nursing reforms, she wrote a pamphlet called Mortality of the British Army, and this was an early use of pictorial charts to present data. She paved the way for all those diagrams in the financial pages, with wheat bags, or oil barrels or human figures lined up like so many paper dolls. She hammered away again in 1858 in her Report on the Crimea:

It is not denied that a large part of the British force perished from causes not the unavoidable or necessary results of war...(10,053 men, or sixty percent per annum, perished in seven months, from disease alone, upon an average strength of 28,939. This mortality exceeds that of the Great Plague)...The question arises, must what has here occurred occur again?
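Nightingale's “sixty percent per annum” is an annualised figure: the seven-month death toll as a fraction of the average strength, scaled up to a full year. Here is a quick Python check of that arithmetic, assuming she simply scaled seven months up to twelve (the variable names are illustrative, not hers):

```python
# Figures from the quoted passage
deaths = 10_053            # deaths from disease alone
average_strength = 28_939  # average strength of the force
months = 7                 # length of the period

# Fraction of the average force that died within the seven months
period_rate = deaths / average_strength

# Assumption: simple linear scaling from seven months to twelve
annual_rate = period_rate * 12 / months

print(f"Over {months} months: {period_rate:.1%}")
print(f"Per annum: {annual_rate:.1%}")
```

It comes out at about 59.6 per cent a year, so her “sixty percent” holds up.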

In 1858, Nightingale was elected to the Statistical Society of London and turned her attention to hospital statistics on disease and mortality. Now read on…

This entry began as two radio talks delivered on the ABC, more than thirty years ago. My friend Peter Chubb later asked me if I had addressed these issues in a book that was in progress, and I said that I hadn’t, but that I had provided a link to the text of the talks. Two nights later, I decided to add it to the book, and the next night, I rewrote it. Here is a tweaked version.

There is enough information here to let readers try this one-paragraph exercise in Evil Statistics out for themselves.

Boris, Don and Scotty went fishing, and caught ten fish. Four weighed 1 kg, two were 2 kg, two were 3 kg, one was 6 kg, and one was 10 kg. They reported the average as 1 kg, 2 kg and 3 kg, and all were telling a sort of truth. Boris reported the mode, the most common mass; Don reported the median, the value halfway between the masses of the two middle fish (both 2 kg); Scotty reported the mean, adding all the masses and dividing by ten. Each value was true, each was different.
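Python's standard `statistics` module will happily tell all three stories. A minimal sketch, with the ten catches typed in as a list:

```python
import statistics

# The ten fish, in kilograms
masses = [1, 1, 1, 1, 2, 2, 3, 3, 6, 10]

boris = statistics.mode(masses)    # the most common mass
don = statistics.median(masses)    # halfway between the 5th and 6th values once sorted
scotty = statistics.mean(masses)   # total mass divided by ten

print(f"Boris (mode): {boris} kg")
print(f"Don (median): {don} kg")
print(f"Scotty (mean): {scotty} kg")
```

`statistics.median` averages the two middle values when there is an even number of items, which is exactly what Don did.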

It all sounds a bit like “Lies, damned lies, and statistics”, but who first said that? The popular myth is that it was Mr. Disraeli, the well-known politician, but many quite reputable and reliable reference books blame author Mark Twain.

It turns out that Twain did publish it, in his autobiography, and he attributed the line to Disraeli, but nobody has ever found those words in anything Disraeli said or wrote. Twain didn't coin the phrase either, for versions of it were in print in the 1890s, years before his autobiography appeared. The Disraeli credit, though, looks very much like Twain's own embellishment: he wanted the joke to have greater force, and so gave the credit to an English politician.

Twain wasn't only known for his admiration of a good “Stretcher” (of the truth, that is); it seems he even stretched the truth when he was talking about lies, and his name wasn't even Mark Twain, but Samuel Clemens! Now would you buy a used statistic from this man?

In the nineteenth century, when Disraeli is supposed to have made the remark, statistics were just numbers about the State. The state of the State, all summed up in a few simple numbers, you might say.

Now governments being what they are, or were, there was more than a slight tendency in the nineteenth century to twist things just a little, to bend the figures a bit, to bump up the birth rate, or smooth out the death rate, to fudge here, to massage there, to adjust for the number you first thought of, to add a small conjecture or maybe to slip in the odd hypothetical inference.

It was all too easy to tell a few small extravagances about one's armaments capacity, or to spread the occasional minor numerical inexactitude about whatever it was rival nations wanted to know about, and people did just that. Even today, when somebody speaks of average income, if you don’t smell fish, at least remember our three fishermen, and ask if that’s the mean, the median or the mode.

When I was young, I smoked cigarettes, but the cost and the health risks convinced me to stop, and I did, back in 1971. Smokers think we reformed smokers are tiresome people who keep on at them, trying to get them to stop as well.

The non-smokers say those who still puff smoke are the tiresome people, who can't see the carcinoma for the smoke clouds, who deny any possibility of any link between smoking and anything. Like the tobacco pushers, the smokers dismiss the figures contemptuously as “only statistics”. The really tiresome smoker will even say a few unkind things about the statisticians who are behind the figures. Or about the statisticians who lie behind the figures.

By the end of the 19th century, statistics were no longer the mere playthings of statesmen; they were a way to clump large groups of related facts into convenient chunks. If you can see how the statistics were arrived at, perhaps you can trust them.

At one stage in my career, I led a gang of people who gathered statistics and messed about with numbers, but we preferred to be called ‘number-crunchers’. People say a statistician is “somebody who's rather good around figures, but who lacks the personality to be an accountant”.

They speak of the statistician who drowned in a lake with an average depth of 15 cm. We are told that a statistician collects data and draws confusions, or draws mathematically precise lines from an unwarranted assumption to a foregone conclusion. They say “X uses statistics much as a drunkard uses a lamp-post: rather more for support than for illumination”.
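The drowned statistician's mistake is easy to reproduce. The soundings below are invented for the purpose: ninety-nine readings of 5 cm and one hole 1,005 cm deep, which averages out at a perfectly wadeable 15 cm.

```python
import statistics

# Invented depth soundings, in centimetres: a shallow lake with one deep hole
depths = [5] * 99 + [1005]

average_depth = statistics.mean(depths)
deepest = max(depths)

print(f"Average depth: {average_depth} cm")
print(f"Deepest point: {deepest} cm")
```

The mean is honest enough, but it says nothing about the one spot where you would need to swim.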

Crusty old conservatives give us a bad name, pointing out that tests reveal half our nation's school leavers to be below average, which is true, but it is equally true that the vast majority of Australians have more than the average number of legs. Think about it: all you need is one Australian amputee!
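The legs trick works because a mean can be dragged down by a single unusual value. Here is a minimal sketch with a hypothetical town of 1,000 people, one of whom has lost a leg:

```python
import statistics

# Hypothetical population: 999 people with two legs, one amputee with one
legs = [2] * 999 + [1]

mean_legs = statistics.mean(legs)                    # a shade under two
median_legs = statistics.median(legs)                # the middle person still has two
above_mean = sum(1 for n in legs if n > mean_legs)   # people with more legs than the mean

print(f"Mean: {mean_legs}, median: {median_legs}")
print(f"{above_mean} of {len(legs)} people have more legs than the mean")
```

The school-leaver complaint only holds automatically for the median, which splits a group roughly in half by definition; with a mean, as the legs show, the split can be almost anything.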

If somebody does a Little Jack Horner with a pie that's absolutely bristling with statistical items and they produce just one statistical plum, I won't be impressed at all: the plum's rather more likely to be a lemon, anyhow. The statistics have to be plausible and significant. Later, I will show you a statistical link between podiatrists and public telephones: this is obviously nonsense, and we should ignore it. There is no logical reason for either to influence the other.

Still, unless there is a plausible reason why X might cause Y, it's all very interesting, and I'll keep a look-out, just in case a plausible reason pops up later, but I won't rush to any conclusion. Not just yet, I won't.

First, I will check on the likelihood of a chance link, something we call statistical significance. After all, if somebody claims to be able to tell butter from margarine, you wouldn't be too convinced by a single successful demonstration, would you? Well, perhaps you might be convinced: certain advertising agencies think so, anyway.

If you tossed a coin five times, you wouldn't think it meant much if you got three heads and two tails, unless you were using a double-headed coin, maybe. If somebody guessed right three, or even four, times out of five, on a fifty-fifty bet, you might still want more proof.

You should, you know, for there's a fair probability it was still just a fluke, a higher probability than most people think. There's about one chance in six (5/32) of guessing exactly four out of five fifty-fifty events correctly. Here is a table showing the probabilities of getting zero to five correct from five tosses:

| zero right | one right | two right | three right | four right | five right |
|---|---|---|---|---|---|
| 1/32 | 5/32 | 10/32 | 10/32 | 5/32 | 1/32 |

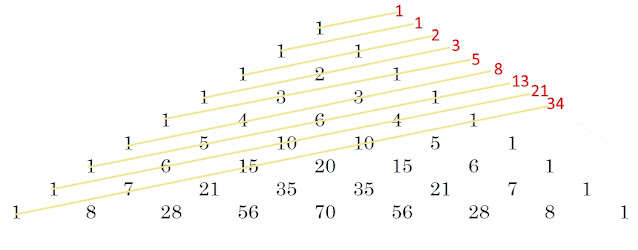

The clever reader may notice a resemblance to Pascal’s triangle here! Now back to the butter/margarine study. Getting one right out of one is a fifty-fifty chance, while getting two right out of two is a twenty-five per cent chance, still a bit too easy, maybe. So you ought to say “No, that's still not enough. I want to see you do it again!”.
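The numbers in the table are the 1, 5, 10, 10, 5, 1 row of Pascal's triangle, each divided by 2⁵ = 32, the number of equally likely ways five fifty-fifty guesses can come out. A short Python sketch that rebuilds the table with the standard-library `math.comb`, keeping the fractions exact:

```python
from fractions import Fraction
from math import comb

n = 5               # five fifty-fifty guesses
outcomes = 2 ** n   # 32 equally likely sequences of right and wrong

# comb(n, k) counts the sequences with exactly k right: one row of Pascal's triangle
probs = [Fraction(comb(n, k), outcomes) for k in range(n + 1)]

for k, p in enumerate(probs):
    print(f"{k} right: {comb(n, k)}/{outcomes} = {float(p):.3f}")

# Chance of four or more right by sheer luck
print(f"4 or 5 right: {float(probs[4] + probs[5]):.3f}")
```

So even four or five right out of five turns up by luck three times in sixteen.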

Statistical tests work in much the same way. They keep on asking for more proof until there's less than one chance in twenty of any result being just a chance fluctuation. The thing to remember is this: if you toss a coin often enough, sooner or later you'll get a run of five of a kind.
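Both ideas in that paragraph can be put into numbers. In the sketch below, `calls_needed` counts how many lucky fifty-fifty calls in a row it takes before the chance of pure luck drops below a chosen threshold, and `chance_of_run` works out the probability that a run of five of a kind (all heads or all tails) turns up somewhere in a longer series. It assumes fair, independent tosses, and both function names are hypothetical.

```python
def calls_needed(threshold):
    """Smallest number of consecutive right fifty-fifty calls whose chance is below threshold."""
    n, p = 0, 1.0
    while p >= threshold:
        n += 1
        p /= 2          # each extra right call halves the chance of pure luck
    return n, p


def chance_of_run(tosses, run=5):
    """Probability that `run` identical results in a row appear in `tosses` fair tosses."""
    if tosses < run:
        return 0.0
    # streak[r] = probability the current streak has length r and no full run has happened yet
    streak = [0.0] * run
    streak[1] = 1.0     # after the first toss the streak is one long
    hit = 0.0
    for _ in range(tosses - 1):
        nxt = [0.0] * run
        for r in range(1, run):
            p = streak[r]
            nxt[1] += p / 2             # a different result starts a new streak
            if r + 1 == run:
                hit += p / 2            # the same result completes the full run
            else:
                nxt[r + 1] += p / 2     # the same result extends the streak
        streak = nxt
    return hit


for threshold in (1 / 20, 1 / 100, 1 / 1000):
    n, p = calls_needed(threshold)
    print(f"below {threshold:g}: {n} right in a row (chance {p:.4f})")

for tosses in (5, 20, 100):
    print(f"{tosses} tosses: chance of a run of five = {chance_of_run(tosses):.3f}")
```

Five right in a row (1/32) gets under the one-in-twenty bar, seven under one in a hundred, and ten under one in a thousand; meanwhile, in a hundred tosses a run of five of a kind is very likely indeed.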

As a group, scientists have agreed to be impressed by anything rarer than a one in twenty chance, quite impressed by something better than one in a hundred, and generally they're over the moon about anything which gets up to the one in a thousand level. That's really strong medicine when you get something that significant.

Did you spot the wool being pulled over your eyes, did you notice how the speed of the word deceives the eye, the ear, the brain and various other senses? Did you feel the deceptive stiletto, slipping between your ribs? We test statistics to see how “significant” they are, and now, hey presto, I'm asserting that they really are significant. A bit of semantic jiggery-pokery, in fact.

That's almost as bad as the skullduggery people get up to when they're bad-mouthing statistics. Even though something may be statistically significant, that's a long way away from the thing really being scientifically significant, or significant as a cause, or significant as anything else, for that matter.

Statistics make good servants but bad masters. We need to keep them in their places, but we oughtn't to refuse to use statistics, for they can serve us well. Now you are ready to object when I assert that all the podiatrists in New South Wales seem to be turning into public telephone boxes in South Australia, and it all began with Florence Nightingale. Most people think of her as the founder of modern nursing, but as part of that she created ways to use statistics to pinpoint facts.

After her name was made famous, directing nursing in the Crimean war, she returned to England in 1856, and started to look at statistics, and the way they were used. She wrote a pamphlet called “Mortality of the British Army”, and in 1858 she was elected to the Statistical Society of London.

She looked at deaths in hospitals, and demanded that hospitals keep their figures in the same way. The Statistical Congress of 1860 had, as its principal topic, her scheme for uniform hospital statistics. It isn’t enough to say Hospital X loses more patients than Hospital Y does, so therefore Hospital X is doing the wrong thing.

We need to look at the patients at the two hospitals, and make allowances for other possible causes. We have to study the things, the variables, which change together. Statistics, remember, are convenient ways of wrapping a large amount of information up into a small volume. A sort of short-hand condensation of an unwieldy mess of bits and pieces.

And one of the handiest of these short-hand describers is the correlation coefficient, a measure of how two variables change at the same time, the one with the other. Now here I'll have to get technical for a moment. You can calculate a correlation coefficient for any two variables, things like number of cigarettes smoked, and probability of getting cancer.

The correlation coefficient is a simple number which can suggest how closely related two sets of measurements really are. It works like this: if the variables match perfectly, rising and falling in perfect (straight-line) step, the correlation coefficient comes in with a value of one. But if there's a perfect mismatch, one going down in step as the other goes up (the more you smoke, the smaller your chance of surviving), then you get a value of minus one.
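For anyone who wants to see the machinery, here is the usual (Pearson) correlation coefficient as a short Python function: the sum of the products of the two sets of deviations from their means, divided by the square root of the product of their sums of squared deviations. The cigarette figures are invented purely to show the two extremes. Python 3.10 and later also offer `statistics.correlation`, which does the same job.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences of numbers."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of the same length, at least two long")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # How far each value sits from its own mean, multiplied pairwise and summed
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return sxy / math.sqrt(sxx * syy)


cigarettes = [0, 5, 10, 20, 40]                    # invented daily counts
in_step = [2 * c + 1 for c in cigarettes]          # rises exactly with smoking
mismatch = [100 - c for c in cigarettes]           # falls exactly as smoking rises

print(f"{pearson_r(cigarettes, in_step):+.2f}")    # perfect match
print(f"{pearson_r(cigarettes, mismatch):+.2f}")   # perfect mismatch
```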

With no match at all, no relationship, you get a value somewhere around zero. But consider this: if you have a whole lot of golf balls bouncing around together on a concrete floor, quite randomly, some of them will move together, just by chance.

There’s no cause, nothing in it at all, just a chance matching up. And random variables can match up in the same way, just by chance. And sometimes, that matching-up may have no meaning at all. This is why we have tests of significance. We calculate the probability of getting a given correlation by chance, and we only accept the fairly improbable values, the ones that are unlikely to be caused by mere chance.
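One way to put a number on “unlikely to be caused by mere chance” is a permutation test. It is not necessarily the test behind the original calculations (the classical approach uses tables of the t distribution instead). Shuffle one variable many times, breaking any real connection, and count how often the shuffled data produce a correlation at least as strong as the real one. The data, seeds and trial count below are arbitrary choices for the sketch.

```python
import math
import random


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def permutation_p_value(xs, ys, trials=5_000, seed=1):
    """Estimated chance that shuffled data match up at least as strongly as the real data."""
    rng = random.Random(seed)
    observed = abs(pearson_r(xs, ys))
    shuffled = list(ys)
    as_strong = 0
    for _ in range(trials):
        rng.shuffle(shuffled)           # destroys any genuine link between xs and ys
        if abs(pearson_r(xs, shuffled)) >= observed:
            as_strong += 1
    # The +1s count the observed arrangement itself, so the estimate is never zero
    return (as_strong + 1) / (trials + 1)


# Two "golf balls" tracked for ten bounces each: pure noise, no connection at all
rng = random.Random(7)
ball_a = [rng.random() for _ in range(10)]
ball_b = [rng.random() for _ in range(10)]

r = pearson_r(ball_a, ball_b)
p = permutation_p_value(ball_a, ball_b)
print(f"r = {r:+.2f}, chance of doing this well by luck ~ {p:.3f}")
```

Try a few different seeds for the golf balls: roughly one time in twenty, two unrelated balls will clear the one-in-twenty bar.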

We aren’t on safe ground yet, because all sorts of wildly improbable things do happen by chance. Winning the lottery is improbable, though the lottery people won't like me saying that. But though it's highly improbable, it happens every day, to somebody. With enough tries, even the most improbable things happen.
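The arithmetic behind “with enough tries” is short: if something has probability p of happening on any one independent try, the chance it happens at least once in n tries is 1 - (1 - p)^n. The lottery figures below are made up for illustration, not any real lottery's odds.

```python
def chance_at_least_once(p, tries):
    """Probability that an event with chance p per independent try happens at least once."""
    return 1 - (1 - p) ** tries


# Hypothetical lottery: one chance in a million per ticket, a million tickets sold
print(f"Somebody wins: {chance_at_least_once(1e-6, 1_000_000):.2f}")

# Twenty unrelated comparisons, each judged at the one-in-twenty level
print(f"At least one 'significant' fluke: {chance_at_least_once(0.05, 20):.2f}")
```

Neither outcome needs a miracle: each is better than even money.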

So here's why you should look around for some plausible link between the variables, some reason why one of the variables might cause the other. But even a plausible link proves very little on its own: there may be an independent linking variable.

Suppose smoking was a habit which most beer drinkers had, suppose most beer drinkers ate beer nuts, and just suppose that some beer nuts were infected with a fungus which produces aflatoxins that cause slow cancers which can, some years later, cause secondary lung cancers.

In this case, we'd get a correlation between smoking and lung cancer which still didn't mean smoking actually caused lung cancer. And that's the sort of grim hope which keeps those drug pushers, the tobacco czars going, anyhow. It also keeps the smokers puffing away at their cancer sticks.
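The beer-nut story is easy to simulate. In the toy model below (every number in it is invented), beer drinking drives both smoking and the nut-borne cancer risk, and smoking has no effect on cancer at all, yet the two still come out clearly correlated. Correlating what is left after removing the beer effect makes the link vanish. This illustrates a confounding variable; it is not a model of real smoking data.

```python
import math
import random


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


rng = random.Random(42)
people = 10_000

# Hidden variable: how much beer (and so how many beer nuts) each person gets through
beer = [rng.gauss(0, 1) for _ in range(people)]

# Smoking and cancer risk each depend on beer plus their own independent noise;
# note that cancer_risk is never computed from smoking
smoking = [b + rng.gauss(0, 1) for b in beer]
cancer_risk = [b + rng.gauss(0, 1) for b in beer]

print(f"smoking vs cancer: r = {pearson_r(smoking, cancer_risk):+.2f}")

# Subtract the beer effect (known exactly here, because we built the model)
smoking_left = [s - b for s, b in zip(smoking, beer)]
cancer_left = [c - b for c, b in zip(cancer_risk, beer)]
print(f"after allowing for beer: r = {pearson_r(smoking_left, cancer_left):+.2f}")
```

In real studies the hidden variable is never known exactly, which is why researchers measure and allow for as many plausible ones as they can.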

It shouldn't, of course, for people have thrown huge stacks of variables into computers before this. The only answer which keeps coming out is a direct and incontrovertible link between smoking and cancer. The logic is there, when you consider the cigarette smoke, and how the amount of smoking correlates with the incidence of cancer. It's an open and shut case.

I'm convinced, and I hope you are too. Still, just to tantalise the smokers, I'd like to tell you about some of the improbable things I got out of the computer in the 1980s. These aren't really what you might call damned lies, and they are only marginally describable as statistics, but they show you what can happen if you let the computer out for a run without a tight lead.

Now anybody who's been around statistics for any time at all knows the folk-lore of the trade, the old faithful standbys, like the price of rum in Havana being highly correlated with the salaries of Presbyterian ministers in Massachusetts, and the Dutch (or sometimes it's Danish) family size which correlates very well with the number of storks' nests on the roof.

More kids in the house, more storks on the roof. Funny, isn't it? Not really. We just haven't sorted through all of the factors yet. The Presbyterian rum example is the result of correlating two variables which have increased with inflation over many years.
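Here is the inflation effect in miniature. Both series below are invented: each grows about 5 per cent a year, plus small wiggles of its own that have nothing to do with the other. The raw figures correlate almost perfectly; divide out the shared growth and the correlation all but disappears.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


years = range(50)
# Invented series: about 5% growth a year each, plus small unrelated wiggles
rum_price = [100 * 1.05 ** t * (1 + 0.02 * math.sin(t)) for t in years]
ministers_pay = [50 * 1.05 ** t * (1 + 0.02 * math.cos(3 * t)) for t in years]

print(f"raw figures: r = {pearson_r(rum_price, ministers_pay):+.2f}")

# Divide out the shared 5% growth (known exactly here because we built it in)
rum_real = [p / 1.05 ** t for t, p in zip(years, rum_price)]
pay_real = [s / 1.05 ** t for t, s in zip(years, ministers_pay)]
print(f"growth removed: r = {pearson_r(rum_real, pay_real):+.2f}")
```

With real price and salary series the growth rate isn't known in advance, so analysts usually correlate year-on-year changes, or adjust for inflation, instead.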

You could probably do the same with the cost of meat and the average salary of a vegetarian, but that wouldn't prove anything much either. In the case of the storks on the roof, large families have larger houses, and larger houses in cold climates usually have more chimneys, and chimneys are what storks nest on. So naturally enough, larger families have more storks on the roof. With this information, the observed effect is easy to explain, isn't it?

There are others, though, where the explanation is less easy. Did you know, for example, that Hungarian coal gas production correlates very highly with Albanian phosphate usage? Or that South African paperboard production matches the value of Chilean exports, almost exactly?

Or did you know the number of iron ingots shipped annually from Pennsylvania to California between 1900 and 1970 correlates almost perfectly with the number of registered prostitutes in Buenos Aires in the same period? No, I thought you mightn't.

These examples are probably just a few more cases of two items with similar natural growth, linked in some way to the world economy, or else they must be simple coincidences. There are some cases, though, where, no matter how you try to explain it, there doesn't seem to be any conceivable causal link. Not a direct one, anyhow.

There might be indirect causes linking two things, like my hypothetical beer nuts. These cases are worth exploring, if only as sources of ideas for further investigation, or as cures for insomnia. It beats the hell out of calculating the cube root of 17 to three decimal places in the wee small hours, my own favourite go-to-sleep trick.

Now let's see if I can frighten you off listening to the radio, that insomniac's stand-by. Many years ago, in a now-forgotten source, I read that there was a very high correlation between the number of wireless receiver licences in Britain, and the number of admissions to British mental institutions.

At the time, I noted this with a wan smile, and turned to the next taxing calculation exercise, for in those far-off days, all correlation coefficients had to be laboriously hand-calculated. It really was a long time ago when I read about this effect.

It struck me, just recently (that was 40 years ago!), that radio stations pump a lot of energy into the atmosphere. In America, the average five-year-old lives in a house which, over the child's life to the age of five, has received enough radio energy to lift the family car a kilometre into the air. That's a lot of energy.
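To get a feel for that figure, the energy needed to lift a car is just mass × g × height. The 1,500 kg car mass is an assumption for the sketch, not part of the original claim:

```python
car_mass_kg = 1500   # assumed mass of a family car
g = 9.81             # gravitational acceleration, m/s^2
height_m = 1000      # one kilometre

energy_j = car_mass_kg * g * height_m    # potential energy gained, in joules
energy_kwh = energy_j / 3.6e6            # 1 kWh = 3.6 million joules

print(f"{energy_j / 1e6:.1f} MJ, or about {energy_kwh:.1f} kWh")
```

That is about 4 kilowatt-hours in all, received over five years.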

Suppose, just suppose, that all this radiation caused some kind of brain damage in some people. Not all of them necessarily, just a susceptible few. Then, as you get more licences for wireless receivers in Britain, so the BBC builds more transmitters and more powerful transmitters, and more people will be affected. And so it is my sad duty to ask you all: are the electronic media really out to rot your brains? Will cable TV save us all?

Presented in this form, it's a contrived and, I hope, unconvincing argument. Aside from anything else, any physicist can tell you that the radiation used for radio transmission is non-ionising: its wavelengths are far too long, and each photon carries far too little energy, to break the chemical bonds in living cells. My purpose in citing these examples is to show you how statistics can be misused to spread alarm and despondency. But why bother?

Well, just a few years ago, problems like this were rare. As I mentioned, calculating just one correlation coefficient was hard yakka in the bad old days. Calculating the several hundred correlation coefficients you would need to get one really improbable lulu was virtually impossible, so fear and alarm seldom arose.

That was before the day of the personal computer and the hand calculator. Now you can churn out the correlation coefficients faster than you can cram the figures in, with absolutely no cerebral process being involved.

As never before, we need to be warned to approach statistics with, not a grain, but a shovelful, of salt. The statistic which can be generated without cerebration is likely also to be considered without cerebration.

And that brings me, slowly but inexorably, to the strange matter of the podiatrists, the public telephones, and the births.

Seated one night at the keyboard, I was weary and ill at ease. I had lost one of those essential connectors which link the parts of one's computer. Then I found the lost cord, connected up my computer, and fed it a huge dose of random data.

I found twenty ridiculously and obviously unrelated things, so there were one hundred and ninety correlation coefficients (one for each of the 20 × 19 ÷ 2 pairs) to sift through. That seemed about right for what I was trying to do, which was to show that figures may prove nothing at all.
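The count of 190 comes from pairing every variable with every other one, and it shows why a fishing trip of that size nearly always lands something. The one-in-twenty rule alone predicts a handful of “significant” results; the chance of at least one fluke below treats the 190 tests as independent, which they aren't quite, since each variable appears in 19 of them.

```python
from math import comb

variables = 20
pairs = comb(variables, 2)         # 20 x 19 / 2 distinct pairs
alpha = 1 / 20                     # the one-in-twenty significance level

expected_flukes = pairs * alpha                 # average number of chance "hits"
chance_of_any = 1 - (1 - alpha) ** pairs        # assumes independent tests

print(f"{pairs} correlations, about {expected_flukes:.1f} flukes expected")
print(f"chance of at least one fluke: {chance_of_any:.4f}")
```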

When I was done, I switched on the printer, and sat back to wait for the computer to churn out the results of its labours. The first few lines of print-out gave me no comfort, then I got a good one, then nothing again, then a real beauty, and so it went: here are my cunningly selected results.

Remember: I have simply used, for honest reasons, the methods of the crooks and con-men.

| | Tasmanian birth rate | SA public phones | NSW podiatrist registrations |
|---|---|---|---|
| Tasmanian birth rate | 1 | +0.94 | -0.96 |
| SA public phones | +0.94 | 1 | -0.98 |
| NSW podiatrist registrations | -0.96 | -0.98 | 1 |

Well of course the podiatrists and phones part is easy. Quite clearly, New South Wales podiatrists are moving to South Australia and metamorphosing into public phone boxes. Or maybe they're going to Tasmania to have their babies, or maybe Tasmanians can only fall pregnant in South Australian public phone booths.

Or maybe codswallop grows in computers which are treated unkindly. Figures can't lie, but liars can figure. I would trust statistics any day, so long as I can find out where they came from, and I would even trust statisticians, so long as I knew that they knew their own limitations. Most of the professional ones do know their limitations: it's the amateurs who are dangerous.
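You can stage your own podiatrists-and-phone-boxes show. The sketch below invents twenty series as pure random walks (each year's value is the previous one plus a random step, a bit like totals that drift over time), computes all 190 correlations, and prints the three most impressive. None of the series has anything to do with any other; the names, seed and fifteen-year length are arbitrary.

```python
import itertools
import math
import random


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def random_walk(rng, years):
    """A series that drifts: each value is the previous one plus a random step."""
    level, series = 0.0, []
    for _ in range(years):
        level += rng.gauss(0, 1)
        series.append(level)
    return series


rng = random.Random(1985)
names = [f"series_{i:02d}" for i in range(20)]
data = {name: random_walk(rng, 15) for name in names}

# Every pair of unrelated series: 190 correlations in all
results = [(pearson_r(data[a], data[b]), a, b) for a, b in itertools.combinations(names, 2)]
results.sort(key=lambda item: abs(item[0]), reverse=True)

for r, a, b in results[:3]:
    print(f"{a} vs {b}: r = {r:+.2f}")
```

Run it with a different seed and you get a different set of “discoveries”, which tells you all you need to know about them.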

I'd even use statistics to choose the safest hospital to go to, if I had to go. But I'd still rather not go to hospital in the first place.

After all, statistics show clearly that more people die in the average hospital than in the average home.

A note about statistics

At one stage in my working life, allegedly professional colleagues would come to see me for help with “crunching their numbers”. The trouble is that a statistic is just a number that gives you a quick summary, and quite often they only had the summary numbers, because the original data had been tossed out. Whatever you do, never, ever, ever throw away any of the data, until well after all of the analysis and discussion is finished!
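Here is why the raw data matter. Two sets of ten catches can share the same mean and still be nothing alike; once only the mean has been kept, there is no way back to the median, the spread or the biggest fish. The second set is invented for the comparison:

```python
import statistics

# The fishing trip from earlier, and an invented trip of ten identical fish
boris_don_scotty = [1, 1, 1, 1, 2, 2, 3, 3, 6, 10]
uniform_trip = [3] * 10

for name, catch in (("original trip", boris_don_scotty), ("uniform trip", uniform_trip)):
    print(
        f"{name}: mean {statistics.mean(catch)}, "
        f"median {statistics.median(catch)}, largest {max(catch)}"
    )
```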
